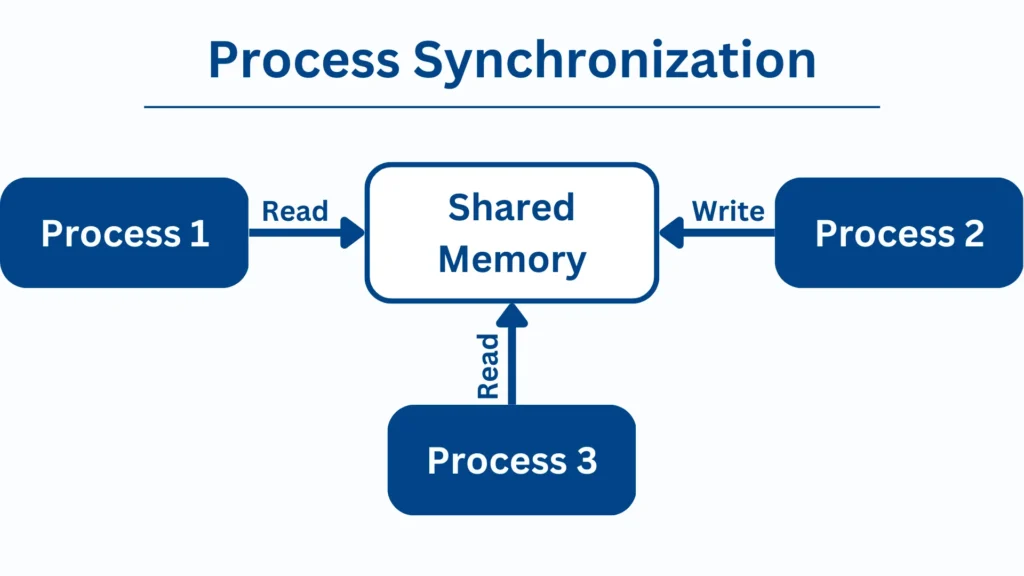

This article will discuss process synchronization, which is the coordination of several threads or processes in operating systems and concurrent programming to guarantee resource sharing and orderly execution. This synchronization is essential because it avoids conflicts that can cause data corruption.

We’ll examine how important it is to preserve system stability, enhance effectiveness, and permit secure concurrent access to shared resources. For more insights on this, check out our detailed post on OS Directory Structure.

Importance of Process Synchronization

Process synchronization is essential for computer systems and concurrent programs to run smoothly and effectively. Here is why it matters:

- Preventing Resource Conflicts: By preventing several processes or threads from accessing shared resources simultaneously, synchronization mechanisms like mutexes and semaphores help ensure data integrity and prevent data corruption.

- Improving System Stability: Enforcing synchronization helps systems avoid race conditions and deadlocks that might otherwise cause applications to crash or freeze.

- Enhancing Performance: Efficient synchronization lets concurrent work proceed with minimal interference, which makes applications faster and more responsive and makes better use of system resources.

- Encouraging Cooperation: Synchronization methods let processes or threads communicate and coordinate with one another, allowing them to cooperate to accomplish complex tasks or goals.

Critical Section Problem

A critical section is a code segment that accesses shared resources and must be synchronized to prevent data corruption. The critical section problem is the task of designing a protocol that ensures only one thread or process executes its critical section, and so changes the shared data, at a time. Examples include:

- Processing concurrent transactions in an online banking system.

- Controlling network printer access.

- Updating a shared database.

Proper synchronization of critical sections is necessary for such systems to operate safely and dependably.

Requirements for Process Synchronization

Mutual Exclusion

Thanks to mutual exclusion, a shared resource can only be accessed by one process or thread at a time. This avoids simultaneous changes that can cause inconsistent or corrupted data. In multi-threaded or multi-process contexts, it is necessary to provide predictable behavior and preserve data integrity.

- Algorithms: Dekker’s algorithm and Peterson’s solution are the classic software algorithms for achieving mutual exclusion between two processes.

- Peterson’s solution: Coordinates entry into the critical section between two processes using shared variables: one flag per process and a turn variable. It guarantees mutual exclusion and avoids deadlock.

The two algorithms take different approaches to mutual exclusion in concurrent systems, each with its own trade-offs in efficiency and safety.

Progress

Progress guarantees that when no process is in its critical section and some processes want to enter, one of them is chosen without indefinite postponement, so processes do not stay permanently stalled. Lamport’s bakery algorithm is a classic solution that satisfies this requirement.

- Bakery algorithm: Lamport’s bakery algorithm offers a mutual exclusion solution for any number of processes. Each process takes a number before entering, similar to customers in a bakery line, and the process holding the lowest number enters first. This ensures progress and fairness.

- Tie-breaking: Because two processes can draw the same number, ties are settled by comparing process IDs. This guarantees orderly access to critical sections, and every process eventually advances without starvation.

Bounded Waiting

In concurrent programming, bounded waiting ensures that no process is delayed forever from entering its critical section: once a process asks to enter, there is a limit on how many times other processes can enter ahead of it. Fairness is ensured by methods such as the “turnstile” approach or suitable scheduling policies:

- Turnstile Method: This method ensures fairness and bounded waiting times by using a ticket-based system in which processes obtain and release tickets to access critical sections.

- Scheduling Policies: Use techniques such as round-robin scheduling or fair queuing to ensure that processes don’t wait endlessly for resources.

These methods keep the system responsive and avoid situations in which vital resources are arbitrarily denied to processes.

Synchronization Constructs and Types

Semaphores

In concurrent programming, semaphores are synchronization primitives that regulate access to shared resources:

- Meaning and Procedures: A semaphore maintains a counter that controls access. `wait()` decreases the count and blocks the caller if the count is zero; `signal()` increases the count and wakes a waiting process.

- Examples of Implementation: In a producer-consumer scenario, producers call `wait()` to block while the buffer is full and `signal()` to notify consumers when an item is added. Consumers do the reverse: they wait until an item is available and signal when they free a slot.

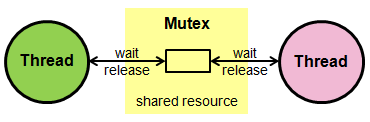

Mutexes (Mutual Exclusion Locks)

To prevent inconsistent data, mutexes, also known as mutual exclusion locks, make sure that only one thread can access a shared resource at a time:

- Goal and Application: Mutexes safeguard critical sections where shared data is accessed and changed. A thread locks the mutex before entering the critical section and unlocks it on leaving, which preserves data integrity by serializing access.

Monitors

In concurrent programming, monitors are a high-level abstract data type that is used to manage shared resources:

- Abstract Data Type: Monitors bundle shared data with the procedures (methods) that operate on it. They automatically maintain mutual exclusion, so at most one thread executes a monitor procedure at any time.

Process Synchronization in C++

```cpp
#include <iostream>
#include <mutex>
#include <string>
#include <thread>

std::mutex mtx; // Mutex that serializes access to std::cout

// Prints one complete line while holding the mutex, so output from
// different threads is never interleaved within a line.
void shared_print(const std::string& msg, int id) {
    std::lock_guard<std::mutex> lock(mtx); // Locked here, released at scope exit
    std::cout << "Thread " << id << ": " << msg << std::endl;
}

void thread_function(int id) {
    for (int i = 0; i < 5; ++i) {
        shared_print("Printing from thread function", id);
    }
}

int main() {
    std::thread t1(thread_function, 1);
    std::thread t2(thread_function, 2);

    t1.join(); // Wait for both threads before exiting
    t2.join();
    return 0;
}
```

Synchronization in Real-World Applications

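Operating system kernels such as Linux use primitives like spinlocks, mutexes, and semaphores to manage important resources such as files and network connections, guaranteeing data integrity and avoiding conflicts between concurrent access requests. Multi-threaded programming languages offer similar tools for synchronizing access to shared variables or objects: Java builds monitors into the language through synchronized methods and blocks, and Python’s threading module provides locks and condition variables.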

Conclusion

In computing, process synchronization makes safe resource sharing and efficient operation possible. Constructs like monitors, semaphores, and mutexes avoid conflicts and preserve program correctness and data integrity. Sound synchronization is essential for dependable software execution, whether in operating systems or multi-threaded applications.

Frequently Asked Questions (FAQs)

How does process synchronization work?

The coordination of threads or processes to guarantee resource sharing without conflict is known as process synchronization.

What makes process synchronization necessary?

It prevents race conditions and inconsistent data when multiple threads or processes access shared resources simultaneously.

How does a mutex function?

A mutex allows only one thread at a time to hold the lock that guards a resource. While one thread holds the mutex, any other thread that tries to lock it is blocked until the mutex is unlocked.